- #ZED CAMERA OPENCV IMPLEMENTATION TO PROCESS POINT CLOUDS HOW TO#

- #ZED CAMERA OPENCV IMPLEMENTATION TO PROCESS POINT CLOUDS CODE#

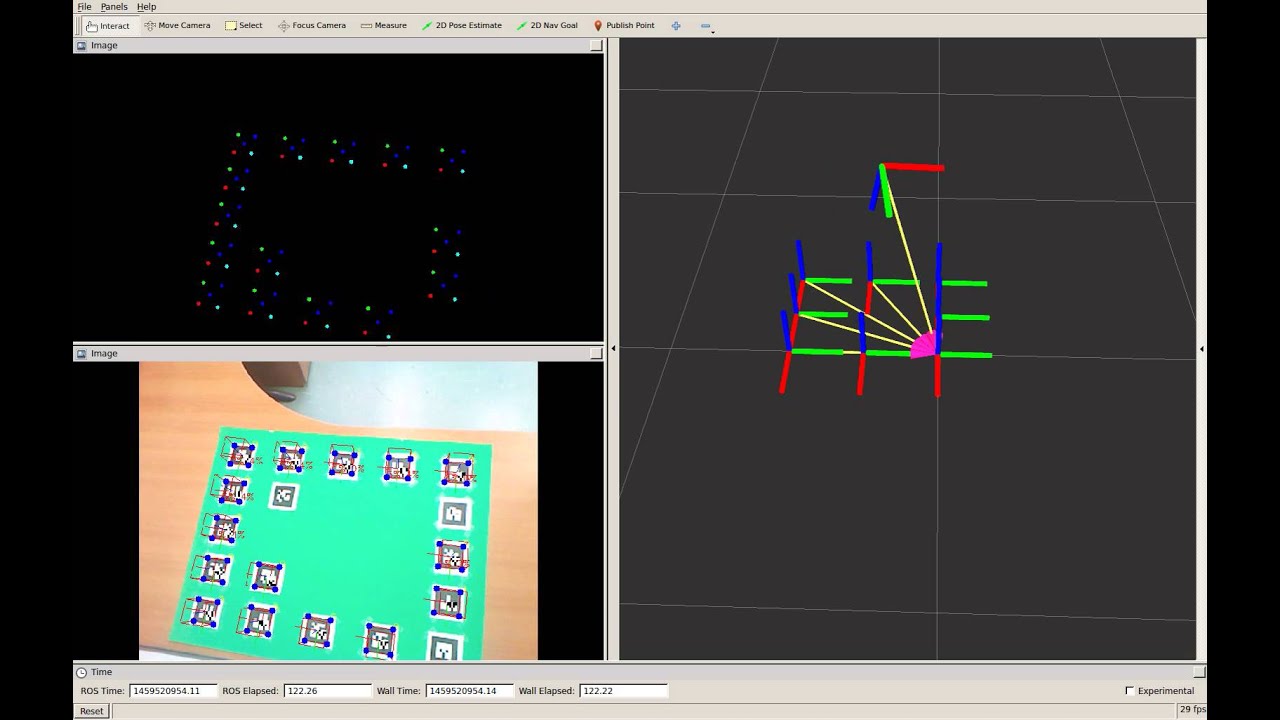

Tags: ros2, ros2-wrapper, slam, stereo-vision, zed-camera.

(Once I get the .svo files) how could I get the point cloud using the SDK if I am not able to use a graphical interface? I am working from a DGX workstation using ROS (Melodic on Ubuntu 18.04) in Docker, and I am not able to make rviz or any other graphical tool work inside the Docker image, so I think I should make the point cloud generation "automated", but I don't know how.

I have an image from a camera, a 3D point cloud of the scene, and camera calibration data (i.e. …). The image and the point cloud of the scene share the same space. Here is a snapshot of my point cloud of the scene. HTH. EDIT: Please refer to slides 16-19 in this presentation.

Official implementation of 'Walk in the Cloud: Learning Curves for Point Clouds Shape Analysis'. Point cloud hybrid voxel engine designed for very large spatial data processing and location intelligence in real time. The 3D visualizer code, located in cloud exe. The component is self-contained, and utilises ROS to subscribe to and publish RGB image data.

The grid subsampling strategy is based on dividing the 3D space into regular cubic cells called voxels. For each cell of this grid, we keep only one representative point. This point, the representative of the cell, can be chosen in different ways.
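As a rough illustration of the grid subsampling idea, here is a minimal sketch in plain Python. The function name `voxel_grid_subsample` and the choice of the barycenter as the representative point are assumptions made for this example; the text only says the representative "can be chosen in different ways". For large clouds an array library would normally be used instead of Python lists.

```python
from collections import defaultdict
import math


def voxel_grid_subsample(points, voxel_size):
    """Keep one representative point per cubic voxel.

    points: iterable of (x, y, z) tuples.
    voxel_size: edge length of each cubic cell, in the same unit as the points.
    The representative chosen here is the barycenter of the points in the
    cell (an assumption; other choices are possible).
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")

    # Group points by the integer index of the voxel that contains them.
    cells = defaultdict(list)
    for x, y, z in points:
        key = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        cells[key].append((x, y, z))

    # Replace each group by the mean of its members.
    representatives = []
    for members in cells.values():
        n = len(members)
        representatives.append(tuple(sum(axis) / n for axis in zip(*members)))
    return representatives
```

A larger `voxel_size` merges more points into each cell and gives a sparser cloud; picking the point closest to the barycenter instead of the barycenter itself would keep only points that were actually measured.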


If your point clouds are not stored in a PCL-compatible format, then you will need to implement your own transform based on the source code given in transforms.hpp.
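For readers who do end up writing the transform by hand, the core operation is applying a 4x4 homogeneous matrix to every point. The sketch below shows that idea in plain Python; it is not a port of PCL's transforms.hpp, and the names `transform_points` and `make_transform_z` are hypothetical helpers introduced only for this example.

```python
import math


def transform_points(points, T):
    """Apply a 4x4 homogeneous transform to a list of (x, y, z) points.

    T is a row-major nested list; its last row is assumed to be [0, 0, 0, 1],
    i.e. a rigid or affine transform with no perspective component.
    """
    transformed = []
    for x, y, z in points:
        transformed.append(
            tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3] for r in range(3))
        )
    return transformed


def make_transform_z(angle_rad, tx, ty, tz):
    """Build a transform: rotation about the Z axis followed by a translation."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


# Usage: rotate a cloud by 90 degrees about Z, then shift it 1 unit along X.
cloud = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.5)]
moved = transform_points(cloud, make_transform_z(math.pi / 2, 1.0, 0.0, 0.0))
```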


I would like to generate a point cloud from stereo videos using the ZED SDK from Stereolabs. What I have now is some rosbags with left and right images (and other data from different sensors). My problem comes when I extract the images and create videos from them: using ffmpeg I get videos in some format (e.g. .mp4), but the ZED SDK needs the .svo format, and I don't know how to generate it. Is there some way to obtain ".svo" videos from rosbags? Also, I would like to ask about the next step, once I get the .svo files.

I am assuming you have your point clouds stored in a PCL-compatible format; in that case you can simply use pcl::transformPointCloud. You can see an example at the top of the page.

One utility that comes with the package, imagestopointcloud, takes two images captured with a calibrated stereo camera pair and uses them to produce colored point clouds, exporting them as a PLY file that can be viewed in MeshLab.

The ZED 2 and ZED 2i provide magnetometer data to get better, absolute information about the yaw angle, atmospheric pressure data that can be used to estimate the height of the camera with respect to a reference point, and temperature data to check the camera's health in a complex robotic system.

From depth 2D image to 3D point cloud with Python. I use a 3D ToF camera which streams a depth 2D image where each pixel value is a distance measurement in meters. I know the following parameters of the camera: cx, cy, fx, fy, k1, k2, k3, p1, p2. I want to convert the depth 2D image into a point cloud where each pixel is converted into a …
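For the depth-image question, one common approach is to back-project each pixel through the pinhole camera model using fx, fy, cx and cy. The sketch below makes several assumptions that the question does not confirm: the depth value is the distance along the optical axis (Z) rather than the radial range some ToF cameras report, the image has already been undistorted (so k1, k2, k3, p1, p2 are not used here), and zero, negative, or non-finite values mark invalid pixels. The function name `depth_to_point_cloud` is made up for this example.

```python
import math


def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth image into camera-frame 3D points.

    depth: 2D list (rows of pixels) of Z distances in meters.
    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    Assumes an undistorted image and Z-depth (not radial range).
    Returns a list of (X, Y, Z) tuples, skipping invalid pixels.
    """
    points = []
    for v, row in enumerate(depth):        # v: pixel row (image y)
        for u, z in enumerate(row):        # u: pixel column (image x)
            if not math.isfinite(z) or z <= 0:
                continue                   # no valid measurement here
            x = (u - cx) * z / fx          # pinhole model, X axis
            y = (v - cy) * z / fy          # pinhole model, Y axis
            points.append((x, y, z))
    return points
```

If the camera reports radial distance instead, each value would first need to be converted to Z-depth, and a distorted image would need an undistortion step using the k and p coefficients before this back-projection.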
